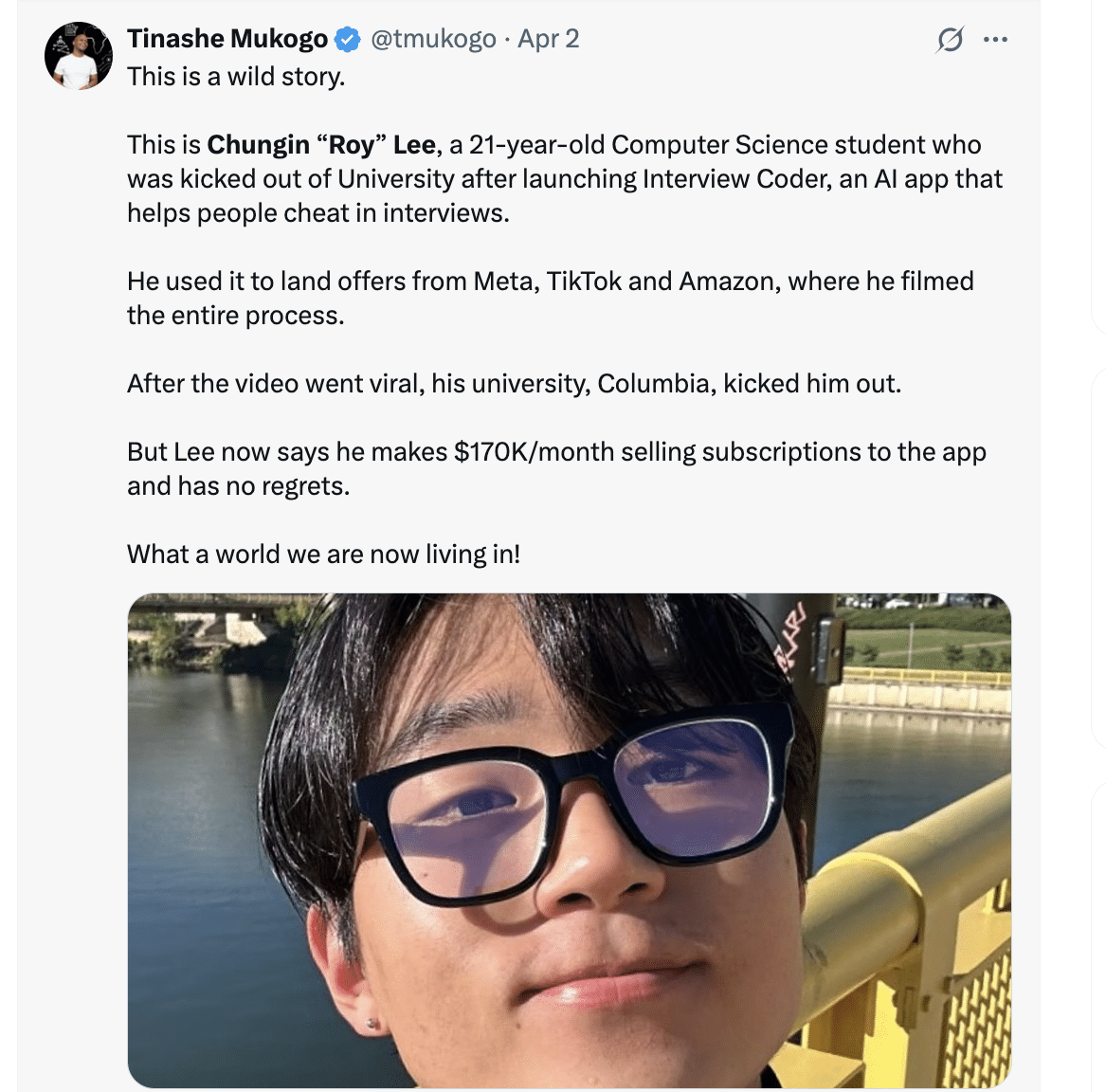

From School Suspension to Startup: How a Columbia Student Turned AI Cheating into a Funded Venture

Chungin “Roy” Lee, a 21-year-old Columbia University undergraduate, found himself at the center of controversy after using an AI tool to gain an unfair advantage. In early 2025, Lee was suspended from Columbia University for co-creating AI software that secretly assisted him during technical coding interviews. The interviews led to coveted internships at tech giants like Amazon and Meta.

Lee and his classmate and co-founder, Neel Shanmugam, developed an application called “Interview Coder” that helped automate solving programming problems in real time. Columbia’s administration viewed the tool as a violation of academic integrity and pursued disciplinary action. By March 2025, Lee announced he had been kicked out of Columbia over the incident.

Facing this punishment, Lee and Shanmugam chose to drop out of the Ivy League school and take their idea outside the campus walls. Lee’s rationale for creating the cheating tool was rooted in frustration. As a computer science student, he had spent countless hours practicing contrived coding puzzles (like those on the LeetCode platform) for interviews—exercises he felt were “outdated and a waste of time” compared to real-world software development.

In Lee’s view, the rules of the game were broken: tech companies expected candidates to ignore modern tools and “pretend that AI doesn’t exist” during evaluations. He saw Interview Coder as a form of protest against this disconnect – a way to expose the absurdity of banning helpful technology in assessments.

The tool acted as an ‘invisible’ AI assistant. It could see interview questions, listen to audio, and feed him real-time solutions without the interviewer’s knowledge. By doing this, Lee even managed to ace interviews and land an internship offer at Amazon, until the company caught on. Amazon later stated that all candidates must pledge not to use unauthorized tools in interviews, implicitly condemning Lee’s tactic.

After a since-deleted demo of Interview Coder went viral on social media and drew backlash from companies like Amazon and Meta, Columbia swiftly intervened.

From Cheating Tool to Funded Startup: The Birth of Cluely

Rather than back down, the duo rebranded their project only weeks after Lee’s suspension as “Cluely” – an AI startup with a bold slogan: “cheat on everything.” Cluely took the original Interview Coder concept and vastly expanded its scope. No longer limited to coding interviews, Cluely’s software aims to provide undetectable, real-time AI assistance in any situation, whether it’s job interviews, school exams, sales calls, or meetings.

The tool operates through a hidden in-browser window or overlay that is invisible to others (for example, an interviewer or proctor cannot see it on a screen share).

In practice, Cluely acts like an invisible co-pilot, instantly generating responses to help users navigate interviews, exams, or meetings on the fly.

One early user description said the program could even take screenshots of questions, use optical character recognition to parse them, and then have an AI model produce and input the answers in real time. In short, it’s a digital co-pilot designed explicitly to cheat by covertly handling tasks that would normally rely on the user’s own knowledge or skill.

Lee portrays Cluely not as a cheating scandal but as a technological inevitability. In an online manifesto, the startup compares its AI helper to inventions like the calculator or spell-check, tools once derided as “cheating” but eventually embraced in everyday use.

“They called calculators cheating. They called Google cheating. The world will say the same about AI,” Cluely’s team declared defiantly on social media. Lee insists that using AI in this manner “isn’t even really cheating” at all, arguing that whenever technology makes people smarter or more efficient, society initially panics but later adjusts and accepts it as normal.

In his view, Cluely is simply “redefining cheating” for the AI era. The startup’s philosophy is that humans shouldn’t refrain from using powerful tools at their disposal; rather than competing against machines, people should “grow with them” – a vision of seamless human-AI collaboration, even in traditionally rule-bound settings like exams and interviews.

$5.3 Million to Build a “Cheat on Everything” Platform

The controversy did little to scare off investors. It arguably fueled Cluely’s rise. In April 2025, just a month or so after his expulsion, Roy Lee announced that he had secured a $5.3 million funding round to grow Cluely. The seed financing was co-led by venture firms Abstract Ventures and Susa Ventures, among other backers. “I got kicked out of Columbia for building Interview Coder, an AI to cheat on coding interviews. Now I raised $5.3 million to build Cluely, a cheating tool for literally everything,” Lee wrote bluntly in a celebratory LinkedIn post.

The funding would be used to hire a team and further develop the platform’s capabilities to, in Cluely’s words, “build the future — faster.” With the funding announcement, Cluely launched publicly and moved its base to San Francisco. The team even released a slick promo video that showcased the tool’s potential (and its pitfalls) in everyday life.

In the satirical video, which some viewers thought resembled a scene from Black Mirror, Lee is on a dinner date, covertly using Cluely to feed him facts and witty answers. The AI helps him bluff about his age and art knowledge, until the interaction turns awkward, illustrating that technology can’t fix every human situation.

Opinions on Social Media

The ad’s edgy humor generated mixed reactions online, but it succeeded in grabbing attention. On Twitter (X), some users praised the concept as a “wake-up call” about technology in society, while others slammed it as “dystopian” and unethical. “Imagine making a Black Mirror episode as a product ad,” one commenter quipped, underscoring how polarizing Cluely’s debut was. Despite the controversy, Cluely quickly attracted users. Lee, now the CEO of his startup, told TechCrunch that by April 2025, the service had already surpassed $3 million in annual recurring revenue from subscriptions.

This suggests that many people, including job seekers, students, and others, are willing to pay for an undetectable edge in their high-stakes tasks. The sheer speed from dorm-room project to revenue-generating startup (in the span of a few months) turned Lee into a minor tech celebrity.

Tech blogs dubbed him and his co-founder the “Ivy League bad boys” of AI for their brazen approach.

Far from repentant, the young founder seemed to relish the role of disruptor. Cluely’s website proudly touts the tagline “cheat on everything”, and its messaging frames the product as ushering in a new normal: “Every single time technology has made people smarter, the world panics… And suddenly, it’s normal,” Lee wrote, predicting that AI assistance will soon be as commonplace (and accepted) as using spellcheck.

Reactions and Fallout for Academia and Industry

Cluely’s rise has prompted a mix of admiration, alarm, and debate across educational and tech communities. At Columbia University, Lee’s alma mater, the reaction was swift and severe. School officials saw the mere creation of a cheating facilitator as antithetical to their honor code. They moved to suspend both Lee and Shanmugam, even though Interview Coder was not used on any school coursework directly. Lee was more surprised by the flood of disciplinary notices from Columbia than by the internship offers he received after acing the interviews.

After the students withdrew under pressure, a Columbia Spectator report on the incident was pointedly titled, “This isn’t even really cheating.” Columbia University declined to discuss details of the case with the press, citing privacy regulations. In the tech industry, reactions were also harsh. Upon learning that Lee had duped their hiring process, Amazon reportedly blacklisted Roy Lee – effectively barring him from employment there.

Amazon’s View of the Situation

An Amazon spokesperson, when asked about the situation, emphasized that all job candidates must agree not to use unauthorized aids during interviews. (In other words, what Lee did is considered cheating under Amazon’s rules and grounds for disqualification.) Meta (Facebook) was also reportedly unhappy about having its internship interview compromised. The incident put big companies on notice that AI could be used to undermine their hiring filters. It wouldn’t be surprising if these firms now update their interview procedures or detection methods in response. Public opinion on the Cluely saga spans the spectrum. Many educators and professionals condemned the platform for encouraging dishonesty.

To them, Cluely represents exactly what responsible AI ethicists have warned about: using AI not as a learning aid but as a way to evade learning and cheat the system. Academic integrity experts worry that normalizing such tools will tempt students (or job applicants) to bypass actually mastering material, raising questions about competence and fairness.

On social media, numerous commentators lambasted Cluely’s founders for profiting from unethical behavior, with some likening the startup’s pitch to a dystopia where no one can be trusted to be genuine.

Are There Others Who Sympathize?

At the same time, some voices have come to Lee’s defense. Sympathizers argue that he merely exposed an inconvenient truth: if AI so easily games our evaluation systems, perhaps the fault lies in those outdated systems rather than the student. “Was it cheating? Probably yes…But what if the rules no longer make sense?” one industry analyst mused, noting the irony that developers today are expected to “build with AI” on the job even as they’re forbidden from using AI during interviews or tests.

This perspective suggests that Lee’s stunt forced a conversation about whether hiring practices and school exams need to evolve in an AI-pervasive world. For his part, Roy Lee remains unapologetic. He acknowledges bending the rules, but he frames himself as a forward-thinker challenging irrational norms. “Everyone told me to quit,” he wrote of the period after his expulsion, “but I ignored all advice and kept going…you need to swing big if you ever wanna make it.” His determination paid off in the form of a multi-million-dollar company.

Lee seems to relish proving his earlier point on a grander scale: “They called Google cheating… The world will say the same about AI. We’re not stopping.” That bravado has made him something of a folk hero to certain tech-savvy teens and college students who feel constrained by academic rules. However, it has equally made him a cautionary tale to others.

Implications for Education, Ethics, and Youth Tech Culture

The saga has quickly become a flashpoint in debates over education and AI ethics. On the one hand, it highlights the inadequacy of traditional institutions in preparing for the era of generative AI. Schools and universities worldwide are grappling with a new reality: students now have access to AI tools that can perform a wide range of tasks, from writing essays to solving complex mathematical equations. Academic honor codes are being put to the test. In some cases, institutions are reacting aggressively – even paradoxically – to assert that cheating will not be tolerated. For example, Emory University recently suspended two student entrepreneurs for developing an AI-based study tool, despite the university’s business school having awarded them a prize for that very idea just months prior.

Emory’s administration feared that the tool, “Eightball,” could enable cheating, illustrating the delicate balance between encouraging innovation and upholding academic integrity. The incident at Emory, much like Columbia’s response to Cluely, highlights how schools are scrambling to update their rules for an era where an algorithm might be used to do a student’s homework. Educators are now asking: How do we assess learning fairly when AI can be an invisible helper?

Change in Education

Some have called for a return to in-person, closed-book exams and oral assessments that AI cannot easily infiltrate. Others suggest integrating AI into teaching and testing, effectively changing the rules so that using AI is not considered cheating, but rather part of the skill set being evaluated.

This mirrors the conversation in industry: if developers will have AI-based assistants on the job, should they be allowed to use them in interviews? The Cluely episode exposes a generational rift in attitudes. Many students and young professionals see AI as just another tool – akin to a calculator – that they should leverage. They ask why they should be penalized for using the best tools available to get results. However, most educators and employers insist that a clear line must remain between legitimate assistance and a deceptive shortcut.

By explicitly positioning itself on the “deceptive” side of that line, Cluely forces a confrontation with the gray areas. It’s prompting institutions to clarify their policies on AI usage and to invest in AI-detection countermeasures. Indeed, even as Cluely touts itself as “completely undetectable,” independent developers have already started working on detection software. One programmer claimed to have a prototype that can recognize when Cluely is being used, hinting at an arms race between cheat-tech and anti-cheat-tech.

Ethics and AI

The ethical implications run deep. Is it okay to use a machine’s intelligence in place of your own when you’re expected to demonstrate personal mastery? Traditional ethics say no – that it undermines the integrity of qualifications and can be unfair to those who play by the rules. AI ethicists worry that normalizing tools like Cluely could erode the very notion of merit and honesty.

If a medical student used an undetectable AI to pass board exams, for instance, the consequences could be dangerous. The “cheating as a service” model raises questions about trust: how can we trust grades, degrees, or job competence if we aren’t sure who is answering – the person or an algorithm? Cluely’s founders counter that society will adapt, just as it did to calculators (which once prompted similar debates about “mental math” skills).

There is an ongoing discussion about where to draw boundaries for AI assistance. For example, AI tools might be acceptable in some low-stakes settings but forbidden in high-stakes licensure exams.

Finally, this story has significant implications for youth and tech culture. Lee’s trajectory from college suspension to Silicon Valley success sends a complex message to young tech enthusiasts. In one sense, it’s inspirational: a kid with an idea challenging the status quo, defying authority, and succeeding on his own terms. This type of maverick narrative has long been part of tech mythology; think of the famous dropouts who have built billion-dollar companies. It may encourage other students to pursue bold tech projects, although preferably without violating school rules. While inspirational to some, the story also delivers a troubling lesson: that blatant rule-breaking and ethical compromise can be rewarded with fame and fortune.

For Parents and Educators

Many parents and educators are concerned that teenagers might see Roy Lee as someone who “gamed the system” and got rich, a perception that risks glamorizing academic dishonesty. The role-modeling effect is real. If a high-profile student openly cheats and then secures venture capital, others may be tempted to follow suit rather than pursue their studies. This puts pressure on institutions to respond decisively so as not to appear to condone such behavior.

Ultimately, the story of the suspended student-turned-CEO raises many questions. It has shed light on the flaws in our educational and professional evaluation systems in the era of AI. As one commentator noted, Lee’s exploit “exposed a fundamental disconnect in how we think about developer skills, AI tools, and what it means to be ‘qualified’ in 2025.”

The world of education and work is at an inflection point: either the rules and methods adapt to accommodate AI, or we will see increasingly elaborate efforts to enforce bans on AI “cheating,” with enterprising youths like Roy Lee testing those defenses. The story has already prompted vigorous debates among teachers, hiring managers, and ethicists. Going forward, it may spur the development of new policies, such as updates to honor codes and revisions to interview processes.

It’s a reminder that technology’s rapid advancement often outpaces our rules. When everyone has an all-knowing AI in their ear, what counts as cheating?

TL;DR

When AI Becomes Cheating: What Every Nigerian Parent Needs to Know About Roy Lee’s Cluely Saga

Quick Take: A U.S. student was suspended for using AI to ace coding interviews—then raised $5.3 million to build Cluely, a platform that lets anyone “cheat on everything.” What does this story mean for how we raise and educate children in a world of generative AI? Read on for key facts, why you should care, and how to talk to your children about using technology ethically.

Who Is Roy Lee and What Is Cluely?

Roy Lee, a Columbia student, created the tool Cluely—a browser overlay that provides real-time AI answers during interviews, exams, or meetings. Venture capitalists quickly backed the idea, and by April 2025, the startup had secured $5.3 million in seed funding and reached $3 million in annual recurring revenue.

Start Talking to Your Children About AI and Integrity

Use the Roy Lee story to spark an open dialogue:

- “Would you trust a doctor who cheated on board exams?”
- “How would you feel if classmates used Cluely while you studied hard?”
- “Where do we draw the line between smart tools and dishonesty?”
- Encourage children to share their views before you jump in with rules.

Practical Tips: Helping Children and Teens Use AI Ethically

- Set Family Tech Rules – e.g., “AI can brainstorm ideas but can’t write your entire essay.”
- Check School Guidelines – Align home rules with your child’s school policy.
- Supervise Major Assignments – Request to review rough drafts and the thought processes behind them.
- Model Honesty – Let kids see you cite sources and admit mistakes.
- Teach Competence First, AI Second – Ensure core skills are solid before allowing AI shortcuts.

Roy Lee’s meteoric rise from suspension to startup shows how quickly technology, and the definition of cheating, can evolve. The lesson is clear: we can’t shield our children from AI, but we can guide them to use it responsibly.